MICROSERVICES: WHAT THEY ARE, FEATURES AND BENEFITS

What are microservices?

Microservices are an approach to software architecture that emerged mainly to support the development of modern Cloud-based applications. With this model, an application is broken up into smaller, autonomous and independent services (the microservices), which communicate with each other through well-defined APIs, with the aim of simplifying deployment and delivering high-quality software quickly. Microservices make it possible to scale easily and rapidly, which promotes innovation and accelerates the development of new features.

Monolithic systems vs microservices

Microservices stand in contrast to the traditional monolithic approach used to develop standard applications, in which every component is built into a single unit. The monolithic method has its disadvantages. For instance, with large applications it is more difficult to solve issues rapidly and deploy new features. With this approach, all processes are tightly coupled and run as a single service. That means that when there is a peak in requests, you need to scale the entire architecture. Consequently, adding or editing features becomes more complicated, and experimenting with and introducing new ideas is limited. At the same time, traditional architectures increase the risk to the application’s availability, because tightly coupled processes that depend on each other amplify the impact of an error in a single process.
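
To make the scaling point concrete, here is a rough back-of-the-envelope sketch. The component names, memory footprints and replica counts are invented for illustration only; the point is simply that a monolith has to be replicated as a whole, while independent services can be replicated one by one.

```python
# Hypothetical memory footprint (GB) of each component of one application.
FOOTPRINT_GB = {"catalog": 2, "checkout": 1, "search": 4}

# Hypothetical number of instances each component needs during a peak.
REPLICAS_AT_PEAK = {"catalog": 1, "checkout": 5, "search": 1}


def monolith_memory(footprint, replicas):
    """All components ship as one unit, so the whole unit is replicated
    as many times as the busiest component requires."""
    return max(replicas.values()) * sum(footprint.values())


def microservices_memory(footprint, replicas):
    """Each service is replicated on its own, only as often as it needs."""
    return sum(footprint[name] * replicas[name] for name in footprint)


print(monolith_memory(FOOTPRINT_GB, REPLICAS_AT_PEAK))       # 35
print(microservices_memory(FOOTPRINT_GB, REPLICAS_AT_PEAK))  # 11
```

With these made-up numbers, a peak on the checkout component alone forces the monolith to reserve 35 GB, against 11 GB when only the checkout service is scaled.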

The microservices approach simplifies problem resolution and optimizes development time. Every process or component constitutes a microservice and runs as an independent service. Microservices communicate through lightweight APIs and cooperate to complete tasks while remaining independent of each other, and the same service can be shared among several applications. It is a granular model in which each service corresponds to a business function and performs only that function. In addition, the independence among services removes the updating, scaling and deployment issues typical of monolithic architectures.
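
As a minimal sketch of what "one service, one function, one lightweight API" can look like, the example below uses only the Python standard library. The price-lookup service, its `/price/<item>` route and the sample data are hypothetical and chosen purely for illustration; a real service would normally run as its own process and be deployed separately.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical data owned by this one service.
PRICES = {"book": 12.5, "pen": 1.2}


class PriceHandler(BaseHTTPRequestHandler):
    """A single-purpose service: it only answers price lookups."""

    def do_GET(self):
        # Expected path: /price/<item>
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "price" and parts[1] in PRICES:
            self._send(200, {"item": parts[1], "price": PRICES[parts[1]]})
        else:
            self._send(404, {"error": "not found"})

    def _send(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def start_service():
    """Start the service on a free local port in a background thread."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), PriceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get_price(host, port, item):
    """Client side: another service calling the API over HTTP."""
    conn = HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", f"/price/{item}")
        response = conn.getresponse()
        return response.status, json.loads(response.read())
    finally:
        conn.close()


server = start_service()
host, port = server.server_address[:2]
print(get_price(host, port, "book"))
server.shutdown()
server.server_close()
```

The caller only knows the HTTP route and the JSON shape of the reply, so the service behind it can be rewritten, redeployed or scaled without touching its clients.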

Microservices and containers

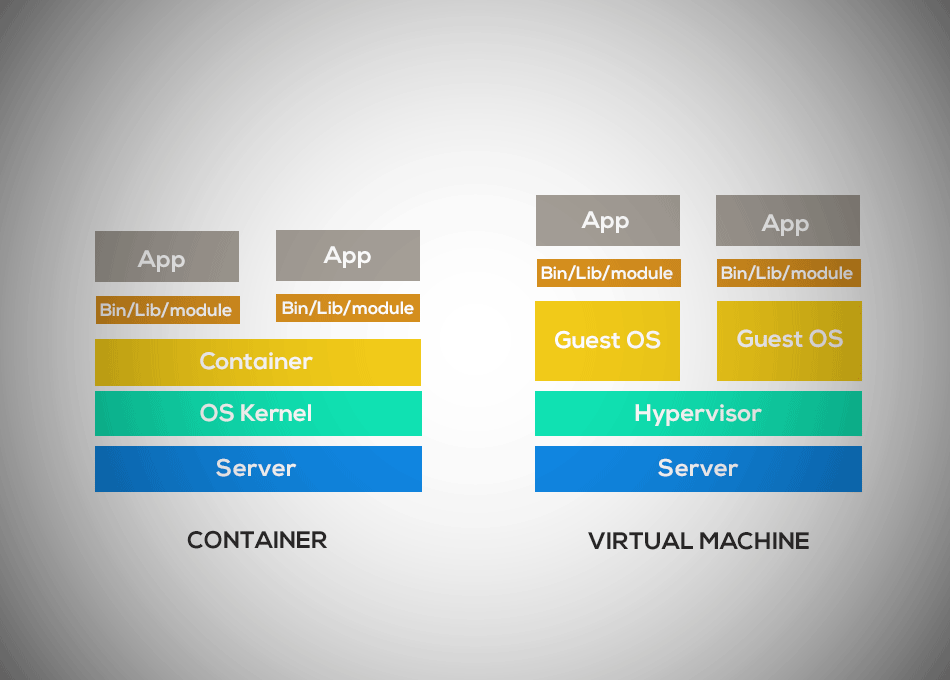

Although microservice-based architecture is not entirely new, containers make it easier to use. Containers are the ideal development environment for applications that use microservices because they allow the different components of the application to run independently on the same hardware, with greater control over the software lifecycle. Containers give microservices a self-sufficient environment in which managing services, storage, networking and security is easier. For these reasons, microservices and containers together form the basis for developing Cloud-native applications. By packaging microservices into containers, you can accelerate development and facilitate the transformation and optimization of existing apps.

Benefits of microservices

Microservices, when implemented correctly, improve the availability and scalability of applications. As we have seen above, one of the most compelling aspects of microservices compared to monolithic systems is that a bug in a single service can be contained, so it is far less likely to affect other services or compromise the entire application. This is just one of the many benefits of microservices: let’s look at them together.

- No single point of failure

- High scalability and resilience

- Faster time-to-market

- Easier deployment

- Top performance

- Freedom in the use of technology

- More experimentation and innovation

- Re-usable code

- Flexibility of development language

- Greater agility of system
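
The first benefit in the list, no single point of failure, can be illustrated with a small sketch. The two service functions below are hypothetical in-process stand-ins for remote calls, and the fallback helper is one simple way to contain a failure, not the only one. It also shows that the isolation is not automatic: the calling service has to be written to tolerate a failing dependency.

```python
def fetch_product(product_id):
    """Stand-in for a call to a hypothetical product service."""
    return {"id": product_id, "name": "Notebook"}


def fetch_recommendations(product_id):
    """Stand-in for a hypothetical recommendation service that is down."""
    raise ConnectionError("recommendation service unavailable")


def call_with_fallback(service_call, fallback, *args):
    """Return the service result, or the fallback value if the call fails."""
    try:
        return service_call(*args)
    except (ConnectionError, TimeoutError):
        return fallback


def build_product_page(product_id):
    """Assemble a page from two services; only the product is essential."""
    product = fetch_product(product_id)
    recommendations = call_with_fallback(fetch_recommendations, [], product_id)
    return {"product": product, "recommendations": recommendations}


print(build_product_page(42))
```

Here the page is still served, just without recommendations, even though one of the services behind it is unavailable.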

… and disadvantages

Don’t forget that microservices, although they are an innovative and high-performing development model, also have disadvantages. These include the complexity inherent in all distributed systems, the need for more robust testing protocols, and the need for experienced teams to manage processes and provide technical support. Besides, if the application doesn’t need to scale rapidly or is not Cloud-based, it may gain little benefit from a microservices architecture.
