MULTI-CLOUD: HOW TO MANAGE CLOUD INFRASTRUCTURES

The cloud has reshaped the way we do business. Thanks to this technology, companies have been able to upgrade their system management as well as their overall services. Unfortunately, many companies still don’t use this technology to its full potential.

Decision-makers in many organizations feel overwhelmed by the amount of technical detail shared online, much of which is hard for non-technical readers to follow. That’s why we decided to write a practical guide to multi-cloud that focuses on the services cloud technology offers.

A growing number of companies have decided to replace the limited possibilities of a single cloud with a multi-cloud setup, which they find faster and more effective.

As a result, they registered 75% growth over the previous year. However, experts recommend adopting multi-cloud in small steps, so that companies can learn about the various functions of this technology gradually.

What is multi-cloud?

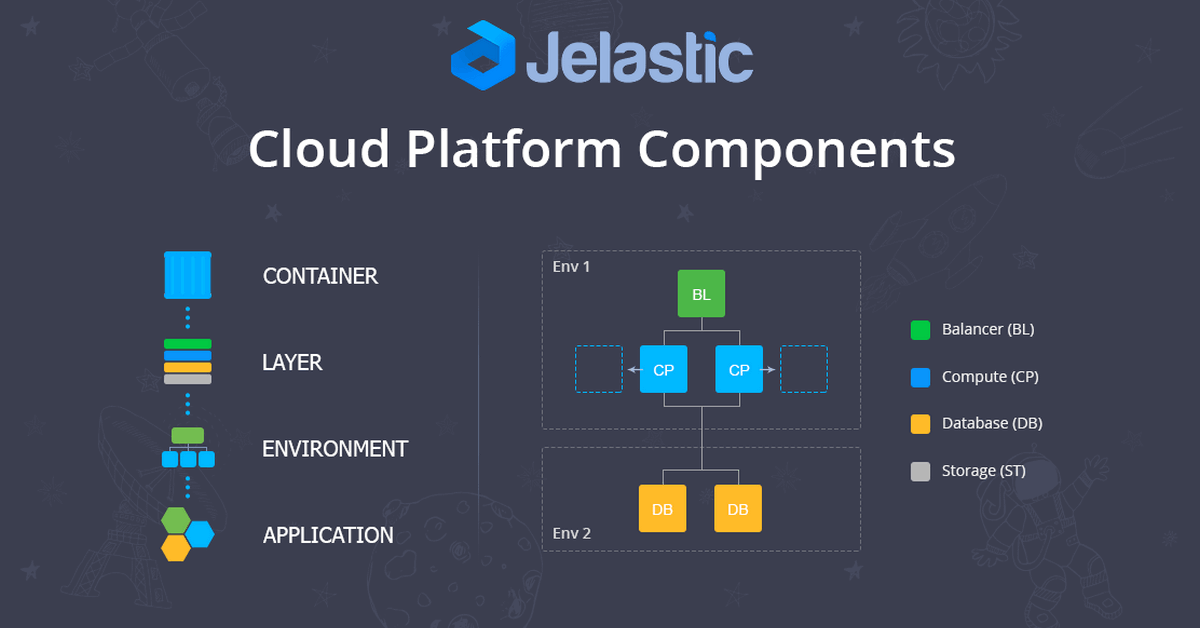

A multi-cloud system runs on more than one public cloud, often offered by different third-party providers. The main advantage of this environment is its flexibility: it can adapt to carry out different tasks autonomously.

It’s an ambitious goal, because the system has to connect different types of software and applications (for example, through APIs such as RESTful interfaces). At the same time, it should reduce or eliminate so-called vendor lock-in: the dependency that forms between a provider, which tends to tie customers to its specific services, and the customers who use those services.
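To make the lock-in point more concrete, here is a minimal Python sketch of one common way to limit dependency on a single vendor. Application code talks only to a provider-neutral interface, and each cloud gets its own adapter. `ObjectStore`, `InMemoryStore`, and `archive_report` are hypothetical names chosen for illustration. The in-memory class stands in for an adapter that would wrap a real vendor SDK, which is not shown here.

```python
from abc import ABC, abstractmethod


class ObjectStore(ABC):
    """Hypothetical provider-neutral interface: application code depends only on this."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class InMemoryStore(ObjectStore):
    """Hypothetical stand-in for a real provider adapter (one that would wrap a vendor SDK)."""

    def __init__(self, provider_name: str) -> None:
        self.provider_name = provider_name
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def archive_report(store: ObjectStore, report_id: str, body: str) -> None:
    # The business logic never names a specific vendor, so switching providers
    # means swapping the adapter, not rewriting this function.
    store.put(f"reports/{report_id}", body.encode("utf-8"))


if __name__ == "__main__":
    for store in (InMemoryStore("provider-a"), InMemoryStore("provider-b")):
        archive_report(store, "q1", "revenue up")
        print(store.provider_name, store.get("reports/q1"))
```

With this design, supporting an additional provider means writing one more adapter class. The code that calls `archive_report` does not change.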

The ideal multi-cloud service provider

Unfortunately, there’s no one-size-fits-all provider of multi-cloud services. However, some general criteria can guide your choice. In the era of big data and the Internet of Things (IoT), companies are under pressure to improve performance at medium and large scale. This requires continually designing and redefining the architectures behind their systems. In this light, multi-cloud becomes necessary to streamline operations and make them smarter.

Multi-cloud service providers must be able to offer a high-performing, adequately sized network infrastructure built on fault-tolerant design, so that it keeps working when individual components fail. Such an infrastructure can support disaster recovery and fast restoration from backups, and it keeps the probability of breakdowns or slowdowns during use low.
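One simple fault-tolerance pattern in a multi-cloud setup is failover: if the primary provider cannot serve a request, the same operation is retried on the next provider in priority order. The Python sketch below illustrates the idea under simplified assumptions. `call_with_failover`, `AllProvidersFailed`, and the two simulated provider functions are hypothetical, and a real deployment would add health checks, timeouts, and data replication between clouds.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when no provider in the list could serve the request (hypothetical)."""


def call_with_failover(providers: Sequence[tuple[str, Callable[[], str]]]) -> tuple[str, str]:
    """Try each (name, operation) pair in priority order and return the first success."""
    errors: list[str] = []
    for name, operation in providers:
        try:
            return name, operation()
        except Exception as exc:  # a real system would catch narrower, provider-specific errors
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


def primary_down() -> str:
    # Simulates an outage at the primary cloud (hypothetical).
    raise ConnectionError("region unavailable")


def secondary_ok() -> str:
    # Simulates a healthy secondary cloud serving a backup (hypothetical).
    return "backup restored"


if __name__ == "__main__":
    served_by, result = call_with_failover([("primary", primary_down), ("secondary", secondary_ok)])
    print(served_by, result)  # secondary backup restored
```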

Before committing to the first provider you find, check that it meets these requirements and that it has qualified technicians available to solve any problems that arise. Otherwise, you risk ending up with an ineffective cloud that stalls and is difficult to manage. You can learn more about the advantages of an effective multi-cloud service by checking out our multi-cloud offer.

The benefits of multi-cloud systems

Multi-cloud not only lets you customize services but also tends to improve how workloads are distributed across multiple nodes of the network, reducing the risk of congestion at any single node. Spreading the work this way can speed up packet delivery on the network and improve routing management. These capabilities open up scenarios that would have been hard to imagine a few years ago.
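As an illustration of spreading work so that no single node becomes congested, the Python sketch below uses a simple greedy heuristic. Tasks are sorted from largest to smallest, and each one is assigned to whichever node currently has the lowest total load. This is one textbook load-balancing technique, chosen here for illustration, and is not necessarily what any particular provider uses. The task names, node names, and cost units are hypothetical.

```python
import heapq


def distribute(tasks: dict[str, int], nodes: list[str]) -> dict[str, list[str]]:
    """Greedy least-loaded assignment of tasks to nodes.

    `tasks` maps a task name to a hypothetical cost (e.g. expected CPU-seconds).
    """
    heap = [(0, node) for node in nodes]  # entries are (current load, node name)
    heapq.heapify(heap)
    assignment: dict[str, list[str]] = {node: [] for node in nodes}
    # Placing the largest tasks first tends to give a more even final spread.
    for task, cost in sorted(tasks.items(), key=lambda item: item[1], reverse=True):
        load, node = heapq.heappop(heap)  # the node with the smallest current load
        assignment[node].append(task)
        heapq.heappush(heap, (load + cost, node))
    return assignment


if __name__ == "__main__":
    plan = distribute({"a": 5, "b": 3, "c": 3, "d": 1}, ["cloud-a", "cloud-b"])
    print(plan)  # {'cloud-a': ['a', 'd'], 'cloud-b': ['b', 'c']}
```

In this example both nodes end up with a total load of 6. Sending every task to one node would instead concentrate all 12 units of work in a single place.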

Multi-cloud has become fundamental in a period of fast and hard-to-predict technological change. As a result, companies also need to learn to adapt quickly to new technologies, so they can stay competitive and meet the needs of potential customers.
