How to optimize the hosting of SaaS applications

Every day, software houses and system integrators ask how they can benefit from adopting cloud hosting services to develop and distribute their SaaS software.

SaaS application providers quickly realize that owning and operating the infrastructure on which their applications are hosted can be expensive and complex, especially when customer demand is uncertain.

Main problems of SaaS infrastructures

Years of working with companies that provide SaaS solutions have led Criticalcase to identify, and fix, some key issues:

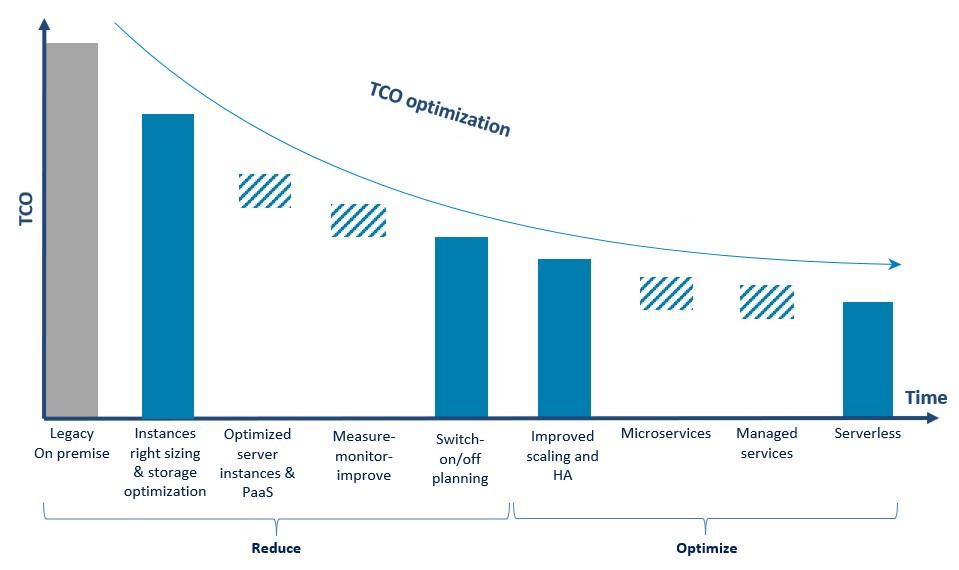

- sizing: correctly sizing the environment that hosts the software can be hard work that requires specific skills. Both over-sizing and under-sizing can cause additional critical issues related to performance and costs;

- costs: the capital investments associated with purchasing hardware and software, and with setting up and managing local data centers (which require server racks, round-the-clock electricity for power and cooling, and IT experts for infrastructure management), are quite large;

- performance: achieving high performance requires ongoing investment to regularly update the hardware to the latest generation;

- speed: during demand spikes, large amounts of computing resources may be needed within a few minutes to keep the system up and running, which is not easy to achieve;

- productivity: an on-premises data center typically requires a significant amount of rack organization and assembly effort, which includes hardware configuration, software patching, and other time-consuming IT management tasks;

- reliability: you must always be able to provide data backup, disaster recovery and business continuity to customers;

- security: independently managing the infrastructure of your SaaS means guaranteeing policies, technologies and controls that strengthen your overall security posture by protecting data, apps and infrastructure from potential threats.
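The sizing and speed issues above can be made concrete with a little arithmetic. The following is a minimal sketch, not a Criticalcase tool: the load profile, the per-server capacity and the function names are all hypothetical, chosen only to illustrate how a fixed fleet compares with capacity that follows demand.

```python
import math


def servers_needed(requests_per_second: float, capacity_per_server: float) -> int:
    """Smallest whole number of servers that can absorb the given load."""
    return max(1, math.ceil(requests_per_second / capacity_per_server))


def provisioning_report(hourly_load, capacity_per_server, fixed_servers):
    """Compare a fixed-size fleet with one that is resized every hour."""
    elastic_server_hours = 0
    overloaded_hours = 0
    for load in hourly_load:
        needed = servers_needed(load, capacity_per_server)
        elastic_server_hours += needed
        if needed > fixed_servers:
            # The fixed fleet cannot serve this hour's demand.
            overloaded_hours += 1
    return {
        "fixed_server_hours": fixed_servers * len(hourly_load),
        "elastic_server_hours": elastic_server_hours,
        "overloaded_hours": overloaded_hours,
    }


# Hypothetical day (requests per second, one value per hour):
# quiet night, steady daytime traffic, a two-hour spike, then back to normal.
hourly_load = [50] * 8 + [200] * 10 + [900] * 2 + [200] * 4

# Assumed capacity: each server handles 100 requests per second.
report_peak = provisioning_report(hourly_load, capacity_per_server=100, fixed_servers=9)
report_avg = provisioning_report(hourly_load, capacity_per_server=100, fixed_servers=3)

print("Sized for the peak:   ", report_peak)
print("Sized for the average:", report_avg)
```

With these made-up numbers, a fleet sized for the peak pays for 216 server-hours where 54 would suffice if capacity followed demand (over-sizing), while a fleet sized for typical load is overloaded during the two spike hours (under-sizing).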

Why delegate the cloud hosting of your SaaS?

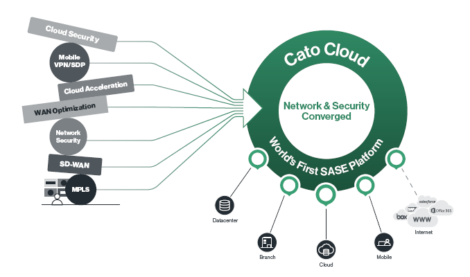

For companies that develop and market SaaS applications, delegating cloud hosting to a third-party provider such as Criticalcase means first and foremost gaining efficiency by separating duties between the developer of the SaaS applications and the hosting service provider.

The software house deals only with software development, while Criticalcase provides the infrastructure on which to run it and brings other essential advantages:

- offers free trial / PoC services and a discount for service overlap periods: for example, a customer who wants to switch to Criticalcase hosting but still has a 2-month contract with the old provider gets 2 months of free service to carry out the migration, without having to pay a double fee;

- protects the SaaS developer’s markup when reselling the software, as Criticalcase does not publish prices on its website;

- provides a free development environment;

- manages the daily maintenance, allowing the SaaS developer to focus on the objectives of its core business: in particular, the IT team no longer has to worry about software installations, licenses, updates and maintenance;

- ensures rapid commissioning and immediate provision of services to customers, so that new services can be launched on the market more quickly;

- allows SaaS creators’ applications to be deployed in multiple data centers, improving agility for users and reducing latency;

- provides backup, monitoring and performance solutions to ensure total continuity of the customer’s business.

For the customer, the added value generated by Criticalcase’s service and support translates into:

- a tailor-made hosting package, in which storage and reservation are defined together according to the customer’s needs;

- four touchpoints (presales, sales, support, CTO) to manage every need at every level, and 24/7 telephone assistance;

- revenue-sharing invoicing;

- consultancy, planning and coaching, even in advance, through co-marketing initiatives to support the sale of the SaaS solution;

- referral of potential customers to the partner.

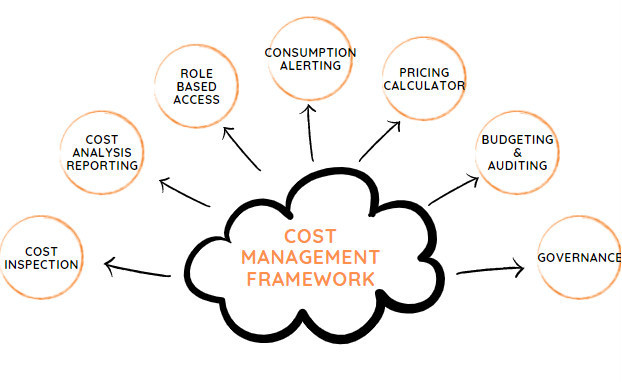

So, while from an economic point of view the cost of the software shifts from Capex to Opex, from a governance point of view all management problems are outsourced: capacity planning, installation, maintenance, updates, security, license management (with the associated updates), hardware alignment and dedicated staff.

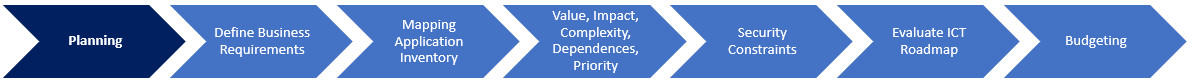

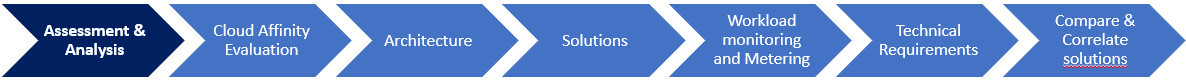

Whether you are a company looking for a cloud environment in which to deploy your existing on-premises solutions, or an application provider looking for a cloud platform on which to deploy a new application or SaaS offering, Criticalcase’s experience will help you.

We can help you transition to the cloud by answering questions such as:

- Can I use the programming language and application platform of my choice?

- Can I use the operating system and environment in which my applications are already distributed?

- How quickly can I respond to peaks and lulls in my customers’ demand or application workloads?

In summary: higher productivity, more speed, greater savings and reduced risk.

Don’t miss out on the benefits of Criticalcase hosting for your SaaS software: contact us now to find out more!